Deep Dives: Windows Into My Work

Here are some practical examples of completed work, including some with links to video walkthroughs and, where appropriate, links to code.

Read Additional Deep Dives On My Blog

- Secure-Built-In, Presume-Breach CI/CD Integration to Production in AWS

- Initiated Product Ownership and Architected and Coded a Rugged and Reusable Deployment Automation Framework

- Pushing Bash Skills With A Dual-Coded Open Source Solution

- Initiated OSS: Security Utility For Returning WinRM Config to Pristine State

- Mentoring Teammates to Adopt PowerShell

- PowerShell Automation Framework for Enterprise Deployment and Config.

- Root Cause Analysis for Non-Admin Reboot of Production Server

- Company-wide PowerShell Advocacy

- Visual Concepts: Redirecting a "Corporate Initiative" Freight Train in 10 Minutes

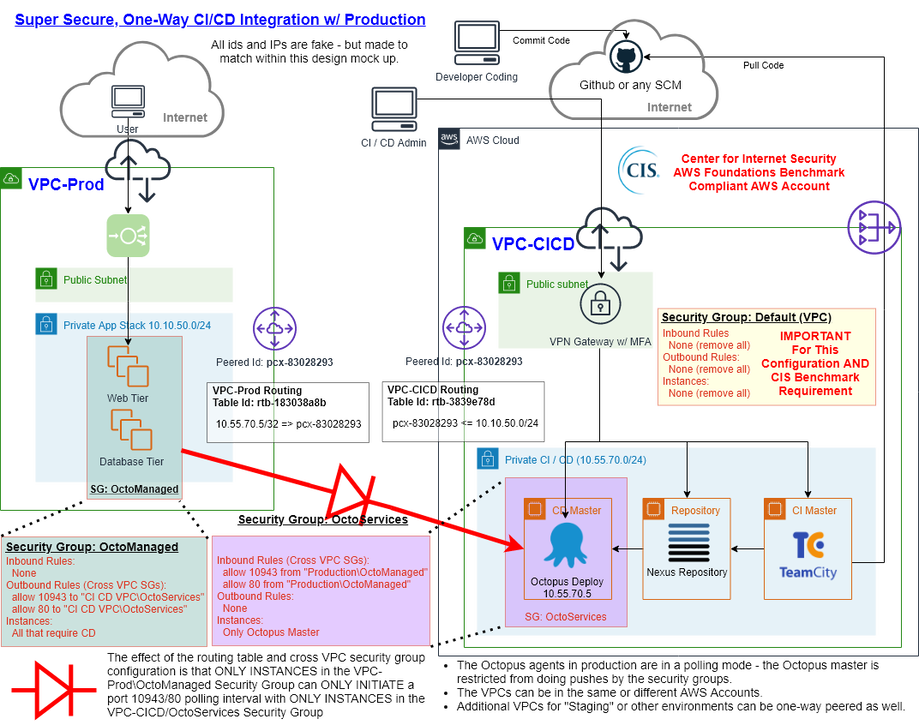

Secure-Built-In, Presume-Breach CI/CD Integration to Production in AWS

Situation:

- The company wanted CI/CD resources deployed to a production-managed account.

- They also wanted the solution to require a new level of security for human access and to follow the security principle of Presume Breach by being as isolated from production as possible.

- Scope: The CI/CD resources would need to access existing production AWS accounts with over 400 servers in various functional tiers. Some of these environments were multitenant while others were single tenant.

Task:

- Create and configure a CI/CD environment that could serve production while being extremely secure.

- Ensure the solution could be implemented with the fewest possible changes to production; otherwise, if it was complex or perceived to introduce security risks, it would never be implemented.

Action:

- I architected and diagrammed the approach and worked with relevant Ops and business leaders to gain support for the approach.

- I configured a new AWS account to be compliant with the CIS AWS Foundations Benchmark, using IaC code I wrote for that purpose, and set it up with MFA on a VPN gateway. Both were huge improvements over prior AWS account implementations.

- After peering the VPCs, I configured the route table in the Production account so that only the subnets containing the managed servers could reach a single Octopus Master in the CI/CD VPC. The route table in the CI/CD VPC only allowed reaching back to the production subnets that contained managed servers.

- A new security group was created in the CI/CD VPC for the OctopusMaster and a new security group was created in Production for the CICDManaged instances.

- The OctopusMaster security group only allowed ingress on ports 10943 and 80 from the new CICDManaged security group in the Production VPC.

- The CICDManaged security group in the Production VPC only allowed egress on ports 10943 and 80 to the OctopusMaster security group in the CI/CD VPC.
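To make the one-way property concrete, here is a minimal Python model of the two security groups described above. It is a sketch, not the IaC that was actually deployed: the `Rule` type and `can_open` function are hypothetical, route tables are not modeled, and AWS's default allow-all egress rule is left out (it would not change the result, because CICDManaged has no ingress rules at all). Security groups are stateful, so replies on a connection that production opens are allowed; the model only asks which side can open a connection. Port 10943 is Octopus Deploy's default port for polling Tentacles.

```python
from dataclasses import dataclass
from typing import Dict, List

# Ports named in the write-up.
CD_PORTS = (80, 10943)


@dataclass(frozen=True)
class Rule:
    direction: str   # "ingress" or "egress"
    peer: str        # security group on the other side of the VPC peering
    port: int


# Rule sets as described above; group names come from the text.
RULES: Dict[str, List[Rule]] = {
    "OctopusMaster": [Rule("ingress", "CICDManaged", p) for p in CD_PORTS],
    "CICDManaged": [Rule("egress", "OctopusMaster", p) for p in CD_PORTS],
}


def can_open(src: str, dst: str, port: int,
             rules: Dict[str, List[Rule]] = RULES) -> bool:
    """A new TCP connection from src to dst needs an egress rule on the source
    group AND a matching ingress rule on the destination group."""
    egress_ok = Rule("egress", dst, port) in rules.get(src, [])
    ingress_ok = Rule("ingress", src, port) in rules.get(dst, [])
    return egress_ok and ingress_ok
```

With these rules, `can_open("CICDManaged", "OctopusMaster", 10943)` is true, while the reverse direction, or any other port, is refused.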

Results:

- By implementing the CI/CD VPC in a fresh AWS account, I was able to:

- deliver results quickly, without needing access to production and without risking reconfiguration of production security.

- lock down the new account to higher security levels during the build, specifically Level 2 of the CIS AWS Foundations Benchmark. MFA on a VPN gateway provides an additional layer of protection (avoiding network level VPN).

- minimize production changes to a very safe set: a) a new VPC peering, b) a new route table, c) a new security group with only egress rules on only two ports.

- The inter-VPC route tables and cross-VPC security groups work together to create a CI/CD environment that is polled one way, by production only, on the ports of the CD system.

- In the event of a breach, either side can disable the two-port, one-way connection with a single security group change.

- The only attack vector available from CI/CD requires creating and deploying an Octopus Deploy job that production consumes, which would still require breaking into the VPN (with MFA) and then breaking into Octopus Deploy.

- If deployments are scheduled events (rather than continuous), the production side routing table or security groups could be automated to only allow connection for periods of known deployment events.

- If the VPCs are only accessed by separate teams, this setup also creates a reasonable separation of concerns.
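If deployments are scheduled, the automation suggested above reduces to computing the desired rule set for the current time and reconciling the production-side security group against it. This is a minimal Python sketch; the one-hour window length and the function names are assumptions. A scheduler would apply the difference through the EC2 API (for example boto3's `authorize_security_group_egress` and `revoke_security_group_egress`), which is omitted here.

```python
from datetime import datetime, timedelta
from typing import Iterable, Set

# Ports named in the write-up.
CD_PORTS: Set[int] = {80, 10943}


def window_open(now: datetime, starts: Iterable[datetime],
                duration: timedelta = timedelta(hours=1)) -> bool:
    """True if `now` falls inside any [start, start + duration) deployment window."""
    return any(start <= now < start + duration for start in starts)


def desired_egress_ports(now: datetime, starts: Iterable[datetime],
                         duration: timedelta = timedelta(hours=1)) -> Set[int]:
    """Ports the production-side CICDManaged group should allow right now:
    the CD ports during a window, nothing otherwise."""
    return set(CD_PORTS) if window_open(now, starts, duration) else set()
```

Keeping the calculation separate from the API calls makes the schedule logic easy to test, and a reconcile loop that runs every few minutes closes the ports again even if a previous run failed.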

Visual Results:

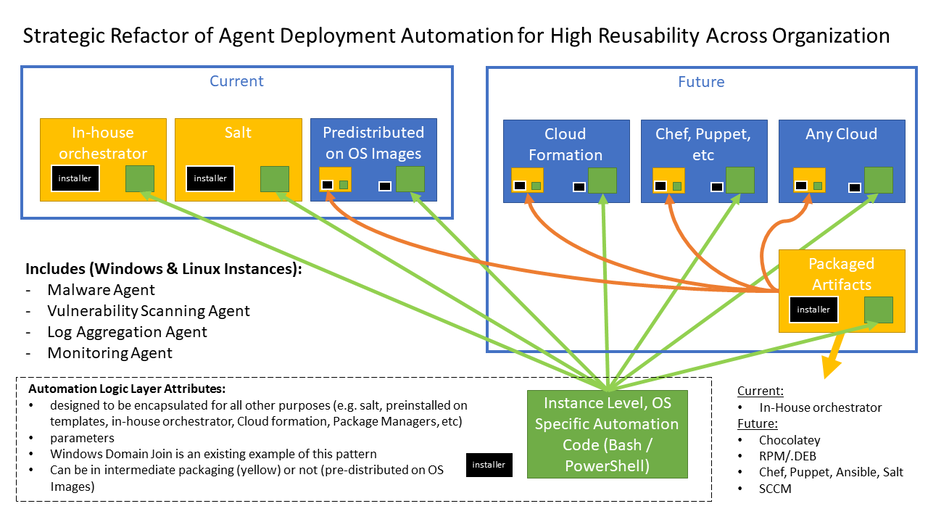

Initiated Product Ownership and Architected and Coded a Rugged & Reusable Deployment Automation Framework

Situation:

- Scope: The company leveraged over 30,000 Windows and Linux instances (50/50 mix) across hundreds of SaaS development teams staffed by over 3000 active developers. These 30,000 instances were required to run standard DevOps agents for malware protection, vulnerability scanning, monitoring and log aggregation.

- Scope: These instances were running Windows 2008 R2, 2012 R2, 2016 and Amazon Linux, CentOS 6, Oracle Enterprise Linux 6, CentOS 7 and Amazon Linux 2.

- Product Ownership Challenge: The software deployment automation used to install these agents was managed as a shared-source effort between central tooling teams and users in SaaS application development teams.

- Product Ownership Challenge: The lack of ownership resulted in a low-value offering with inconsistent quality, functionality and release cadence: it was only updated when absolutely required, and the updating party was then only interested in fixing or enhancing their specific deployment scenario. Changes were not tested across all OS variations and were not adequately communicated to users.

- Architecture Challenge: The deployment automation was built as in-line code to a specific Infrastructure as Code toolchain and architecture that was designed to migrate the automation tooling toward full-on IaC, DevOps, Cloud native. This over-coupling meant that the deployment automation was not available to over half of the systems that were supported by automation outside of this preferred toolchain.

- Production Operations Challenge: Due to the lack of consistency and quality, this deployment automation was frequently at fault during the promotion of SaaS releases into production environments. The DevOps agent stack was required, but not reliable.

- Product Ownership Challenge: The installed DevOps agent versions would fall unacceptably far behind before being addressed, because there was no proactive management to regularly incorporate new versions of the agents.

- Product Ownership Challenge: Bug reports and enhancement requests languished.

- Product Management Challenge: Functional innovations in the deployment automation were not pushed to both OS families nor across other DevOps agents using the same automation.

- Operations Challenge: Teams who attempted to report problems did not have good log data, and many escalations revealed environment setup problems. In addition, teams were frequently deploying in environments to which our team did not have access.

- Product Ownership Challenge: Much of the information developer customers needed to be successful in using this deployment automation was tribal knowledge and was not clearly documented in an easy to find place.

Task:

- Improve on these shortcomings and add new customer value through proactive product ownership and design changes.

Action:

- I initiated, proposed and successfully secured ownership of this deployment automation for the team I lead, which was responsible for the DevOps agents that must be installed on all instance endpoints.

- I architected a Rugged and Reusable Framework for the code that:

- Was branded "Rugged and Reusable Framework" so that the entire company could observe whether the design made any substantial differences to quality and functionality.

- Decoupled the automation code from the preferred DevOps automation toolchain and moved it into shell scripts, allowing it to be used with almost any orchestration or artifact packaging technology in use in the company.

- Still built packaged artifacts for the preferred DevOps automation toolchain in a 100% backward-compatible way, now with thorough testing.

- Implemented intelligent environment testing with verbose logging: the manual procedures we used to diagnose recurring environment setup problems (as opposed to problems with the deployment automation itself) were turned into code, and the success or failure of each test was written to the logs with hints about what might be wrong with the environment. For example, a simple TCP connect test would isolate and log a network configuration problem, rather than letting the installer run and fail in a way that looked like an installation failure.

- Implemented self-scheduling to enable lightweight self-monitoring and self-healing.

- Ensured functional parity across Windows and Linux and across the entire DevOps agent stack.

- Enabled rapid onboarding of new DevOps agents, for instance when the company changed antivirus products from one vendor to another.

- I coded the Windows template and oversaw the Linux template for this deployment automation to:

- Enable team knowledge sharing of best practice code and standard functionality.

- Enable rapid development of newly required agents (e.g. swapping CrowdStrike for TrendMicro), with automated testing as a first-class citizen.

- Incorporate version metadata and changelogs as a part of the regular development.

- The team followed a more disciplined development process that ensured:

- QA testing of all affected OSes upon automation updates and when OS image templates were released with new patches.

- Releases of the underlying software were done on a regular cadence.

- Release announcements were made in a dedicated, subscribable channel in the ChatOps support system.

- I documented how teams should assess and safely adopt new versions of this code during their development cycles.
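The intelligent environment testing described above can be illustrated with the TCP connect example from the text: test reachability before running an installer, and log a pass or fail line with a hint. The framework itself was written as shell scripts; this Python version is only a sketch of the pattern, and its logger name, function names and hint wording are hypothetical.

```python
import logging
import socket
from typing import Iterable, Tuple

log = logging.getLogger("agent-deploy")  # hypothetical logger name


def check_tcp(host: str, port: int, timeout: float = 5.0) -> bool:
    """Environment test: can this machine open a TCP connection to host:port?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            log.info("PASS: TCP connect to %s:%d", host, port)
            return True
    except OSError as exc:  # refused, timed out, DNS failure, unreachable network
        log.error(
            "FAIL: TCP connect to %s:%d (%s). HINT: this is an environment problem, "
            "not an installer bug; check security groups, firewalls, proxy and DNS.",
            host, port, exc,
        )
        return False


def preflight(endpoints: Iterable[Tuple[str, int]]) -> bool:
    """Run every check (so all failures are logged), then report overall success."""
    results = [check_tcp(host, port) for host, port in endpoints]
    return all(results)
```

An install wrapper would call `preflight(...)` with the endpoints an agent needs and, when it returns False, skip the installer and exit with a clear error, so the log points at the environment rather than at the deployment automation.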

Results:

- This critical deployment automation became a reliable and flexible automation asset that could be used across multiple orchestration technologies and was regularly updated with the latest software binaries.

- Adoption by teams not on the preferred orchestration tooling was immediate, doubling the scope of value delivered to the company by doubling the number of machines that could use it, from about 15,000 to 30,000.

- Problems caused in production dropped off due to better testing and better information for teams.

- Dev Team end-users who had environment configuration problems frequently figured out their own problem by examining the verbose, self-diagnostic logging.

- Thanks to the verbose, self-diagnostic logging, our team could frequently complete root cause analysis simply from the logs submitted on support tickets.

Pushing Bash Skills With A Dual-Coded Open Source Solution

Situation:

EBS volumes restored from snapshots stored in S3 require initialization, which covers basically any AMI that has disks attached.

Scope: Any instance running in AWS - actual testing: PowerShell 4 (Server 2012 R2), PowerShell 5.1, PowerShell Core 6.0.1 (On Windows), CentOS 7, Ubuntu 16.04, SuSE 4.4 Leap 42, Amazon Linux and Amazon Linux 2.

The initialization problem has the following characteristics and challenges:

- It may need to run for a very long time, longer than all other automation.

- It can run in parallel in the background.

- It can be throttled to prevent it from dominating the instance.

- If building the machine requires a reboot (more likely on Windows), EBS initialization should restart and finish its work.

- I had been thinking of writing the same tool in both PowerShell and Bash as a way to push my Bash skills, build a best-practice Bash snippet library and make qualified comparisons of the two languages.

Task:

I devised a design that:

- uses the open source disk testing utility FIO.

- can be throttled (via an FIO feature).

- initializes multiple devices in parallel (via an FIO feature).

- uses a scheduled task and a "finished" flag file to accomplish parallelism as well as restart resilience.

- autodiscovers all disks or takes a stipulated list, gracefully skipping non-existent disks.

- can be scheduled up to 59 minutes in the future (to offset machine load for shorter automation).

- can run from GitHub or completely locally with no internet dependencies.

- has functional parity in Bash for Linux and PowerShell for Windows.
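The core of the design above (throttled, parallel reads of every present device, plus a "finished" flag for restart resilience) can be sketched as follows. This is Python rather than the author's Bash and PowerShell, and the flag-file path, function names and default rate are hypothetical. The fio options used (`--name`, `--filename`, `--rw`, `--bs`, `--iodepth`, `--ioengine`, `--direct`, `--rate`) are standard fio options, and reading every block once mirrors AWS's published guidance for initializing EBS volumes with fio; `libaio` is the Linux I/O engine.

```python
import os
from typing import List, Optional

# Hypothetical marker written once initialization has completed.
DONE_FLAG = "/var/tmp/ebs-init.done"


def build_fio_command(devices: List[str], rate: str = "50m",
                      done_flag: str = DONE_FLAG) -> Optional[List[str]]:
    """Return an fio argv that reads each existing device once, or None when
    there is nothing to do (already finished, or no devices present)."""
    if os.path.exists(done_flag):
        return None  # a rerun after reboot sees the flag and exits quietly
    present = [d for d in devices if os.path.exists(d)]  # skip missing disks
    if not present:
        return None
    cmd = ["fio"]
    for dev in present:
        # Each --name begins a new fio job; fio runs jobs concurrently,
        # so every device is read in parallel.
        cmd += [
            f"--name=init-{os.path.basename(dev)}",
            f"--filename={dev}",
            "--rw=read", "--bs=1M", "--iodepth=32",
            "--ioengine=libaio", "--direct=1",   # Linux I/O engine
            f"--rate={rate}",                    # per-job bandwidth cap (throttling)
        ]
    return cmd


def mark_done(done_flag: str = DONE_FLAG) -> None:
    """Call only after fio exits with status 0, so an interrupted run repeats."""
    with open(done_flag, "w") as fh:
        fh.write("initialized\n")
```

A scheduled task (or boot-time job) would run the returned command with `subprocess.run(cmd, check=True)` and then call `mark_done()`. If a reboot interrupts fio, the flag is still absent, so the next boot simply starts the reads again.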

Action:

I created, coded and tested the solution in Bash for Linux and PowerShell for Windows, and built my Bash best-practice snippet library in the process.

It was tested on PowerShell 4 (Server 2012 R2), PowerShell 5.1, PowerShell Core 6.0.1 (On Windows), CentOS 7, Ubuntu 16.04, SuSE 4.4 Leap 42, Amazon Linux and Amazon Linux 2.

Results:

I was able to simultaneously build a very useful open source solution, stretch my Bash skills and build a best-practice Bash snippet library.

More Details:

The deep-dive blog article for this code is here: https://cloudywindows.io/post/fully-automated-on-demand-ebs-initialization-in-both-bash-for-linux-and-powershell-for-windows/

The code is here: https://github.com/DarwinJS/DevOpsAutomationCode

Information on using the solution is embedded in the code as help and can be accessed by opening the code, or with these command lines:

#PowerShell on Windows:

Get-Help InitializeDisksWithFIO.ps1

#Bash on Linux:

./InitializeDisksWithFIO.sh -h

OSS Initiative: Security Utility for Returning WinRM to Pristine State

Situation: Guiding documentation for DevOps operating system preparation utilities such as Packer frequently shows a simple method for opening up WinRM to allow remote connections. Frequently this involves a completely insecure setup, justified by the fact that the system is in a generally safe environment and only open for a limited period of time.

However, these same guides do not explain how to return the system to a pristine state. One underlying assumption is that when the OS template is booted, any new use that requires WinRM will configure it correctly at that time. The fundamental problems with this assumption are: a) the next user of the template may not need WinRM at all and won't suspect it is defaulting to an insecure state, and b) even if the next user does need to set up WinRM, they won't expect that it was previously configured and may miss the fact that some overly permissive settings are already in place. These thinking errors come primarily from the expectation that a "first time boot" (sysprepped) of Windows is in the pristine state of having just been built with defaults.

This challenge is compounded by the fact that Microsoft provides no APIs or guides for resetting WinRM to a pristine state where it can be reconfigured for use again. There are guides for disabling it, but that is not the same thing: as discussed above, if permissive settings are in place, re-enabling WinRM makes those permissive settings active again.

Typically automation developers will disclaim responsibility for the security state of WinRM upon use of their template - to me that does not seem like an appropriate approach to ownership of a security issue they are creating.

Task: What was needed was a way to reliably reset WinRM to its "pristine state", as if it had never been configured, so that the de facto assumption when booting a Windows machine template image (that WinRM is in a factory-pristine state) would always be true.

Action: After consulting Microsoft about any available reset APIs (there aren't any comprehensive resets), I devised a method to isolate and export the WinRM registry keys on a machine that has never had it configured. The reset to pristine involves deleting these keys on the target system and recreating them from the pristine set.

An additional challenge was that this code cannot run while connected over WinRM: doing so causes an immediate abnormal end of the running automation, preventing the preparation automation from completing successfully. Scheduling the reset for first boot was also ill-advised, in case first-boot automation enabled WinRM right before the scheduled task reset it to pristine. So I devised a method to create a self-deleting shutdown script.
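The delete-and-recreate idea in the Action paragraph can be sketched as a list of `reg.exe` commands for the shutdown script to run. This is an illustration in Python, not code from Undo-WinRMConfig: the key path shown (`HKLM\SOFTWARE\Microsoft\Windows\CurrentVersion\WSMAN`, where WinRM keeps much of its configuration), the export file location and the function names are assumptions, and the real tool may cover additional keys. `reg export ... /y`, `reg delete ... /f` and `reg import` are standard `reg.exe` operations.

```python
from typing import Dict, List

# Assumed mapping of WinRM registry keys to pristine .reg exports captured on a
# machine where WinRM was never configured. Illustrative only.
PRISTINE_EXPORTS: Dict[str, str] = {
    r"HKLM\SOFTWARE\Microsoft\Windows\CurrentVersion\WSMAN": r"C:\ProgramData\pristine\wsman.reg",
}


def capture_commands(exports: Dict[str, str] = PRISTINE_EXPORTS) -> List[str]:
    """Run once on a never-configured reference machine to save the pristine keys."""
    return [f'reg export "{key}" "{path}" /y' for key, path in exports.items()]


def reset_commands(exports: Dict[str, str] = PRISTINE_EXPORTS) -> List[str]:
    """Commands for the shutdown script: wipe each key, then restore the pristine copy.
    Not meant to run over a WinRM session, which the reset would cut off."""
    plan: List[str] = []
    for key, path in exports.items():
        plan.append(f'reg delete "{key}" /f')  # drop every current (possibly permissive) value
        plan.append(f'reg import "{path}"')    # recreate the key exactly as exported
    return plan
```

Deleting before importing matters: importing alone would add the pristine values but leave any extra permissive values (such as an added listener) in place.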

Results: The resulting automation creates a shutdown script that performs the reset to pristine, which allows the system preparation automation to continue and exit successfully right as it triggers a shutdown. After the reset, the shutdown automation removes itself from the shutdown script hooks so that it is not triggered on subsequent shutdowns.

This provides a convenient way to reliably reset WinRM to the pristine state that most individuals assume that it is in when booting a templated system image.

While many automators disclaim responsibility for proper WinRM setup when their template is booted, trying to educate people out of this type of de facto behavior is exceedingly hard and never ends, so the net result is insecure systems. By making the system comply with de facto human behavior, all the energy and effort spent trying to counter that behavior becomes unnecessary, and systems become very practically more secure.

More Details:

The deep-dive blog article for this code is here: https://cloudywindows.io/post/winrm-for-provisioning-close-the-door-on-the-way-out-eh/

The code is here: https://github.com/DarwinJS/Undo-WinRMConfig

Mentoring Teammates to Adopt PowerShell

Approach to Moving The Dial On PowerShell Adoption

Situation:

Scope: Automated deployment of software to 18,000 Windows machines.

The management of the application packaging team wanted the team to move forward to PowerShell. The team was busy with their regular workload and had stagnated in this effort. I felt they were having a hard time absorbing the productivity losses of migrating their proficiency with the existing VBScript template to PowerShell. Some team members were also hesitant because the well-worn VBScript was successfully deploying software to 18,000 Windows machines that used 17 language packs.

Task: I felt the team could be encouraged forward if PowerShell template development was targeted at bridging their proficiency from VBScript. I also felt that enhancing the PowerShell template far beyond the existing VBScript template would make moving to PowerShell enticing, since productivity losses could be offset by other gains. Because subsequent releases of packages regularly rotated between packagers, one individual's conversion work would result in other team members inheriting an already working PowerShell script for minor updates. Rather than being mandated to use PowerShell, the team could be drawn into using it. This appealed to the management culture of our team leadership.

Action: I partnered with my manager to undertake PowerShell template development as an inline task to my own packaging activities. This approach had several positive effects, most notably: [a] requirements gathering was pragmatic and organic, based on the needs of real packages - not on theoretical ideals, [b] there was no need to rush as there wasn't an entire team taking time off regular activities to "build the ultimate template", [c] the code was tested as part of production package updates with low risk to the overall package portfolio and to individual packages. I was exceptionally responsive to team member requests for help and their suggestions for improvement. As my personal proficiency grew, I could take the first pass at complex packages and commoditize reusable bits into standard template functions.

Results: Over time the template became very rich and cycle time for simple packages was dramatically reduced. Previously discretionary scripting activities (like having an uninstall routine) became standard and "free" for the small effort of completing specific command lines in the template. Many of the previously custom-coded items from the VBScript era became easily configurable functionality in the template. Colleagues were able to transition to PowerShell more easily, as most of their barriers were removed and they could ease into it either by having my help in converting their package or by inheriting an already working package that had been converted.

PowerShell Automation Framework for Enterprise Deployment and Configuration

PowerShell Template Technical Features

Scope: Automated deployment of software to 18,000 Windows machines.

Some of these features, while technical, were specifically designed to help encourage team proficiency in PowerShell.

- Discoverable functionality for developers via complete function help, self-documenting printable manual, script function and variable listing functions.

- Developer mode allows all script functions to be used interactively for debugging and rapid prototyping.

- Always handles 32-bit and 64-bit issues properly (these issues persist even in pure 64-bit desktop and server environments because 32-bit applications must still be supported).

- Automatic elevation when needed.

- Checks for admin rights, running under the system account and running under SCCM.

- Easy launcher so users, UAT testers and support technicians can start PowerShell scripts without needing complex instructions about elevation, powershell.exe switches and script parameters.

- No runtime installation requirements.

- Comprehensive logging and error trapping.
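One well-known instance of the 32/64-bit issues listed above is WOW64 file system redirection: a 32-bit process on 64-bit Windows that asks for `%WINDIR%\System32` is silently redirected to `SysWOW64`, and must use the virtual `Sysnative` alias to reach the 64-bit folder. The template itself was PowerShell; this Python sketch only illustrates the decision, using the standard `PROCESSOR_ARCHITEW6432` environment variable, which Windows sets only for processes running under WOW64. The function name is hypothetical.

```python
import ntpath
from typing import Mapping


def native_system32(env: Mapping[str, str]) -> str:
    """Return the path that reaches the OS-native System32 folder from this process."""
    windir = env.get("WINDIR", r"C:\Windows")
    if env.get("PROCESSOR_ARCHITEW6432"):
        # 32-bit process on 64-bit Windows: System32 is redirected to SysWOW64,
        # so the virtual Sysnative alias is the way to reach the 64-bit folder.
        return ntpath.join(windir, "Sysnative")
    # Native process (32-on-32 or 64-on-64): System32 is already the right folder.
    return ntpath.join(windir, "System32")
```

On a real machine this would be called as `native_system32(os.environ)`; taking the environment as a parameter keeps the decision testable on any platform.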

Template Walkthrough

Template Code Walk Through:

Self-Describing, Self-Documenting, Discoverable, Console Prototyping, Desired State Functions, Logging.

Template Building Manifesto

My Approach to Building PowerShell Libraries and Templates:

Toolsmithing for Productivity

PowerShell Sample Code

Root Cause Analysis for Non-Admin Reboot of Production Server

Root Cause Analysis

Situation: A production Citrix server unexpectedly rebooted during operating hours, and the Citrix service manager sent my team a screenshot showing that an SCCM service had requested the reboot. The incident had the potential to turn into finger-pointing, so the root cause had to be investigated and accurately reported.

Task: Upon analysis of SCCM logs, I concluded that a specific user ID had requested processing of an SCCM package that required a reboot and was set to allow reboots outside of regular maintenance windows. This conclusion was met with skepticism by the Citrix service manager, as the user was a non-IT, non-admin user of the Citrix server. I was challenged to find the exact path by which the user could have triggered this action, to back up the conclusion.

Action: I engaged two Citrix team members to co-troubleshoot with me. This let us discover the details together and avoided possible refutation of the data and conclusions by that technical team. We discovered three independent ways a user could launch applications in the context of the server, two of them via the MicroStation application. One of these was a custom-built launcher application for MicroStation users. Judging by the SCCM logs, the SCCM notification application was most likely triggered by one of these application startups. At that point the end user likely received a notification from the SCCM notification process that had started in the context of the Citrix server. The fact that this EXE had started in the server context would not be obvious to the end user. Clicking the notification bubble would start SCCM's Software Center, but in the context of the server (again appearing to the end user as if their local machine was asking them to install something). If the user picked the job in question, SCCM would follow its normal design of proxying admin rights for non-admin users, execute the job, and then follow the instructions to allow reboots outside maintenance windows if necessary.

Results: I produced a detailed root cause report that technical staff on the Citrix team had already agreed to (they also had the opportunity to review it to ensure they were comfortable with the tone). The report also included recommendations for closing the vulnerability. The cross-team collaboration I initiated had several benefits: [a] building on each other's ideas and avoiding mistakes in validation testing, [b] validating inferences as we went, [c] producing a pre-agreed report that forestalled refutation attempts and finger-pointing, and [d] improving the sense of teamwork between our teams.

Company-wide PowerShell Advocacy

PowerShell Advocacy

Published as: "Be A PowerShell Champion" in Windows NT Magazine

Situation: IT Pros were aware of the importance of PowerShell and were taking some formal classes, but didn't seem able to make PowerShell a daily part of their IT work. The management of my IT group felt there might be an opportunity to improve the situation by bringing people together to grow their PowerShell skills.

Task: Having a long background in technology training, I suspected that the main challenge was the informal learning required to turn a skill into a daily habit. I proposed that we start an informal group within our team, help its members get up to speed and lead by example, and then branch out to the rest of IT. The branch-out reinforced regular, daily, informal learning: rather than emphasizing formal classroom lessons, it used peer code sharing and an assisted coding clinic for real work scripts. Having a scheduled event gave individuals and their managers a reason to invest in their skills on a regular basis.

For code sharing, I would co-host with the person giving the talk. This helped:

- reduce their prep time,

- ensure that newer learners were not left in the dark when the presenter assumed knowledge in specific areas,

- tease out valuable information the presenter knew but didn't think to share.

Below is a video of the kick-off presentation for the group. Its main goals were to [a] reinforce the legitimacy of informal learning and [b] establish that, although we couldn't formalize informal learning, we could support it substantially through a peer group.

Action: For the meetings, I had to identify and persuade potential presenters, MC the meeting, ensure resources were posted, and post regularly in the Yammer group. I also had an open-door policy for helping anyone in the organization get past roadblocks in scripts they were working on; whenever possible, I would drop everything else to help them right when their minds were primed for solidifying new skills.

Results: Individuals became aware of who else in the company was active in PowerShell.

- Conducted monthly Forums where company PowerShell experts were encouraged to share their expertise through code walkthroughs.

- Networked with and convinced internal company speakers to present.

- Followed each Forum with a coding clinic where anyone could receive help from experts on production code they were working on.

- Published the template I maintained for my group to everyone in the company. The template specifically targeted onboarding VBScript coders to PowerShell.

- Established a central location for PowerShell resources and populated it with free training resources, editors, tools and outputs from meetings.

- Created, and blogged in, a Yammer group (Yammer is a private, internal "Facebook" for businesses) to encourage exchanges and distribute information.

The PowerShell Forum

A Community Initiative To Support PowerShell Proficiency in IT

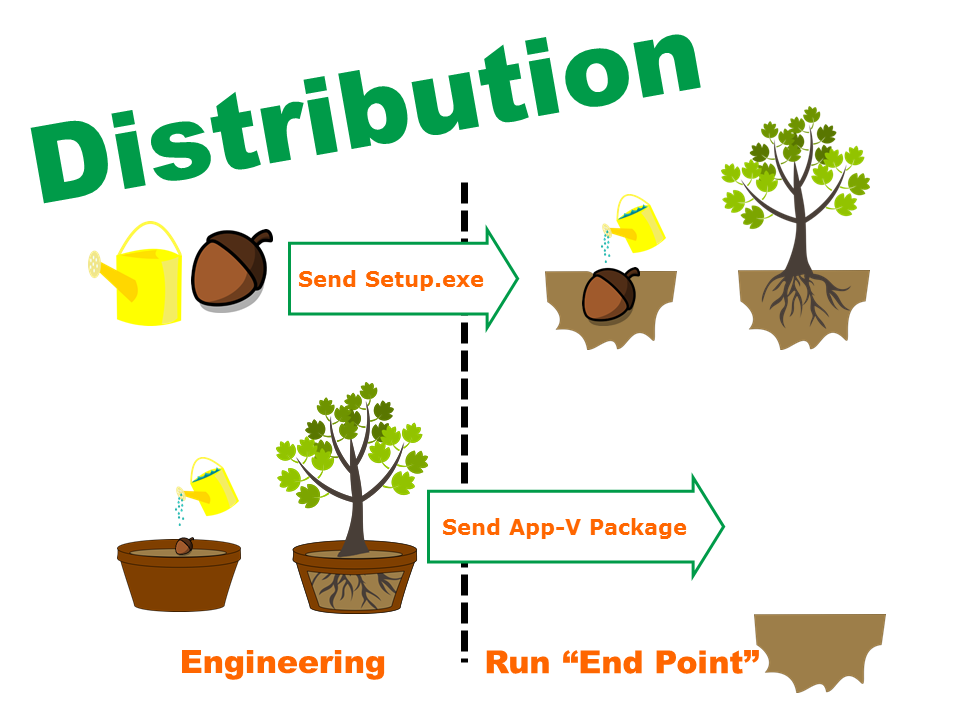

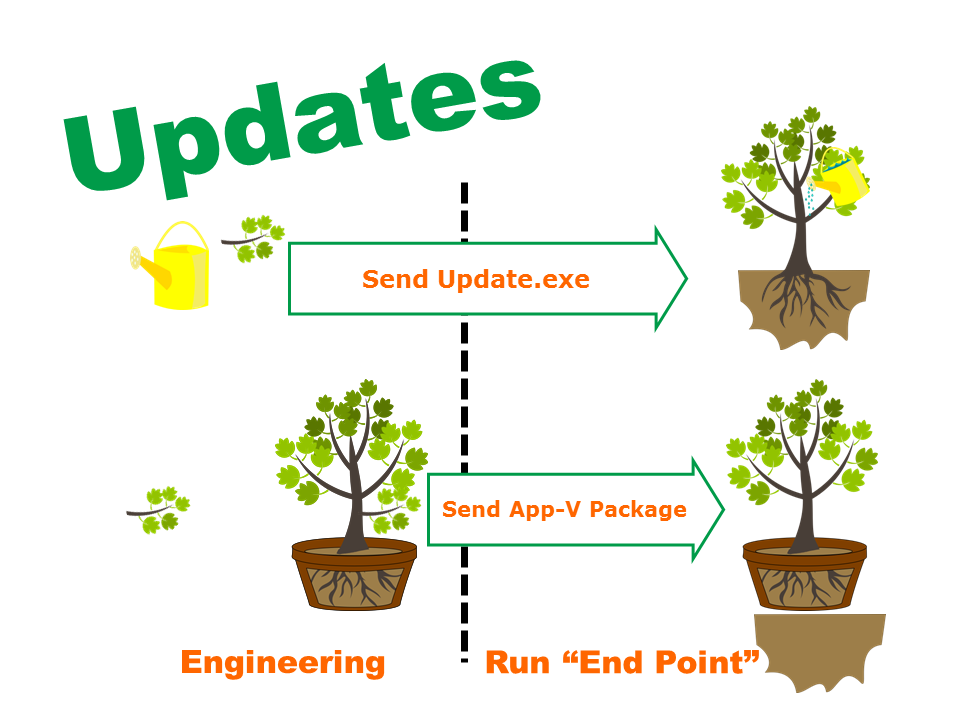

Visual Metaphor: Redirecting a "Corporate Initiative" Freight Train in 10 Minutes

Visual Presentation Concepts

Situation: A group of IT and project managers was to meet with my group to discuss whether to virtualize SAP GUI (a rich, locally installed client for doing almost anything in SAP). Virtualizing an application of this class carries significant risks and losses in agility, but explaining them requires a lot of detail about how existing technology limitations and assumptions end up creating more losses than gains. My sense was that even after our group poured countless hours into pulling off the desired feat, we would then come under fire for being slow to prepare new versions, for being slow to integrate plug-ins, and for making troubleshooting more difficult. It was likely that a step forward in technology would result in a step backward in our ability to service the application in an agile way.

Task: I felt that a visual metaphor would convey the emotional impact of the concept without slogging through endless technical details and trying to bubble them up to business impact. That approach would take too long, would have far too many rabbit holes, and the overall impression would be lost in the dissection of the problem's components. I had previously used the metaphor "Trees Are Like Applications" to help people understand the concepts and limitations of application virtualization; little did I know I was about to discover a level of "Eureka" power in metaphors that I had not tapped before. I knew I was on a fairly solid track when, during practice presentations, my wife and children were able to understand my points.

Action: By following Guy Kawasaki's presentation principles, I created a presentation consisting of relevant graphics with a few closely related words. I built the presentation to be highly visual with light animation so that I would be in control of how the story unfolded. I also used animation for any bulleted lists, not for the sake of movement, but to keep the audience focused and on the same page, and to validate understanding before moving forward.

Once I had visually built a baseline understanding of the metaphor, I displayed a key slide that created a watershed of instant understanding. I then engaged the group by having them list the difficulties of managing trees. Invariably, every difficulty they listed had a direct and self-evident metaphorical equivalent in the technical task at hand.

Results: The results thoroughly astounded me: the understanding imparted by the presentation allowed the group to completely avoid what I had anticipated would be an edgy confrontation over my group simply being a blocker to business progress. The group's previous pro-virtualization stance had originated in a months-long global IT architecture alignment initiative, and it was completely shifted away from that course within the 30-minute presentation.
